Exploring Bark, the Open Source Text-to-Speech Model


Introduction

The AI boom has brought us a lot of tools, from LLMs to image generators. Amid these innovations, text-to-speech (TTS) technology remains crucial for accessibility, marketing, education and more. However, most high-quality TTS models are paid and not open source.

Enter Bark AI, an open-source model developed by Suno.

So, what can we create with Bark?

Bark AI opens up a world of possibilities for developers, content creators, and businesses alike.

Bark AI does more than convert text into speech. It can also add non-verbal sounds such as laughing, sighing and crying to the audio, which makes the voice output sound more realistic and engaging.
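These sounds are requested by writing cues directly into the text prompt. The Bark README lists bracketed cues such as [laughter], [laughs], [sighs], [music], [gasps] and [clears throat]. It also describes conventions such as ellipses (...) for hesitations and ♪ around song lyrics. Because generation is sampled, a cue is not guaranteed to be rendered every time.

The snippet below is a minimal sketch of composing such a prompt in plain Python. `KNOWN_CUES` and `add_cue` are hypothetical helpers made up for illustration and are not part of Bark's API. The snippet does not call Bark itself.

```python
# Bracketed non-speech cues taken from the Bark README's list of examples.
# This set is an illustrative assumption, not an exhaustive or official list.
KNOWN_CUES = {"laughter", "laughs", "sighs", "music", "gasps", "clears throat"}


def add_cue(sentence: str, cue: str) -> str:
    """Append a bracketed non-speech cue to a sentence (hypothetical helper)."""
    if cue not in KNOWN_CUES:
        raise ValueError(f"unknown cue: {cue!r}")
    return f"{sentence.rstrip()} [{cue}]"


prompt = " ".join([
    add_cue("I can't believe that actually worked.", "laughs"),
    add_cue("Now I have to fix the tests.", "sighs"),
])
print(prompt)
# I can't believe that actually worked. [laughs] Now I have to fix the tests. [sighs]
```

The resulting string is passed to `generate_audio` like any other text prompt. Checking cues against a known set simply catches typos such as `[laughz]`, which would otherwise be read as literal text.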

How to Use Bark AI

We will use the example from the Bark GitHub repository, which you can run in Google Colab.

First, let's set up our Google Colab notebook:

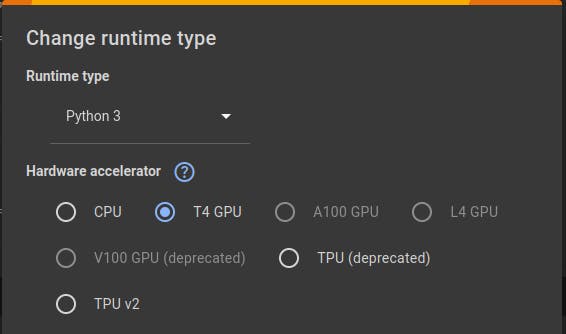

Click Runtime, then Change runtime type, and choose a GPU as the hardware accelerator. Then check that a GPU is attached:

```
!nvidia-smi
```

Install Bark using pip:

```
!pip install git+https://github.com/suno-ai/bark.git
```

Import the libraries:

```python
from bark import SAMPLE_RATE, generate_audio, preload_models
from scipy.io.wavfile import write as write_wav
from IPython.display import Audio
```

Download and load the models:

```python
# download and load all models
preload_models()
```

Generate the audio from text:

```python
# generate audio from text
text_prompt = """
     Hello, my name is Ibra. And, uh — and I like to code. [laughs]
     But I also have other hobbies like Bjj.
"""
# history_prompt selects one of Bark's built-in speaker presets
audio_array = generate_audio(text_prompt, history_prompt="v2/en_speaker_6")

# save audio to disk
write_wav("bark_generation.wav", SAMPLE_RATE, audio_array)

# play the audio in the notebook
Audio(audio_array, rate=SAMPLE_RATE)
```
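The example above sends one short prompt to `generate_audio`. Each call is meant for a short piece of text, so longer scripts are commonly split into sentences. Each sentence is generated separately, and the clips are joined with a brief pause. The Bark repository's long-form generation notebook uses a similar pattern.

The sketch below assumes a few things not in the original example. `generate_long_form` is a hypothetical helper. The sentence splitter is a naive regular expression, where a real tokenizer such as nltk would handle abbreviations better. The generator is passed in as a function, so the logic can be tried without downloading any models.

```python
import re
from typing import Callable

import numpy as np


def generate_long_form(
    text: str,
    generate_fn: Callable[[str], np.ndarray],
    sample_rate: int,
    pause_s: float = 0.25,
) -> np.ndarray:
    """Generate audio sentence by sentence and join the clips with short pauses.

    With Bark, generate_fn could be
    lambda s: generate_audio(s, history_prompt="v2/en_speaker_6").
    """
    # Naive split after ., ! or ? followed by whitespace (an assumption).
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    silence = np.zeros(int(pause_s * sample_rate), dtype=np.float32)

    pieces = []
    for sentence in sentences:
        pieces.append(generate_fn(sentence))  # audio for one sentence
        pieces.append(silence.copy())         # short pause after it
    if not pieces:
        return np.zeros(0, dtype=np.float32)
    return np.concatenate(pieces)


# Stand-in generator so the sketch runs without Bark:
# it returns one second of silence (at 24 kHz) per sentence.
def fake_generate(sentence: str) -> np.ndarray:
    return np.zeros(24_000, dtype=np.float32)


audio = generate_long_form("Hello there. How are you? I'm fine!", fake_generate, 24_000)
print(audio.shape)  # (90000,)
```

In a notebook, you would pass `SAMPLE_RATE` and a lambda wrapping `generate_audio` in place of the stand-ins. You could then save the result with `write_wav` as before.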

This is a simple example. If you want to see the true potential of Bark, check out the video below: